Research Topics

Fast Blind Source Separation

Blind source separation (BSS) is a signal processing technique for separating a mixture without any a priori information such as source directions or positions. Independent component analysis (ICA) and independent vector analysis (IVA) have been developed as typical BSS methods, but they required a large amount of iterative computation. To solve this problem, Prof. Ono proposed algorithms named auxiliary-function-based ICA (AuxICA) [Ono2010LVAICA] and auxiliary-function-based IVA (AuxIVA) [Ono2011WASPAA], which are stable and more than 20 times faster than conventional algorithms. In addition, he implemented BSS as an iPhone app, the first in the world, and demonstrated that the algorithm separated a stereo mixture into two sources in 1/5 of real time on an iPhone [Ono2012IWAENC][Ono2012EUSIPCO].

Recently, we have extended them to independent low-rank matrix analysis (ILRMA) [Kitamura2015ICASSP][Kitamura2016TASLP] and independent deeply-learned matrix analysis (IDLMA). Aiming at real-world applications, we have also worked on an online algorithm [Taniguchi2014HSCMA] and a low-latency algorithm [Sunohara2017ICASSP].
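As a rough illustration of the auxiliary-function idea mentioned above, the following NumPy sketch runs IVA-style updates on a small synthetic mixture. It is a minimal sketch, not the released implementation: it assumes a Laplacian-type source model (contrast G(r) = r), and the function names `auxiva` and `iva_cost`, the array layout (frequency, frame, channel), and the toy data are all chosen here for illustration. Each update solves a small linear system per frequency bin instead of taking a gradient step, so there is no step size to tune.

```python
import numpy as np

def iva_cost(W, X):
    """IVA objective: sum_k mean_t r_k(t) - sum_f log|det W_f| (assumed G(r) = r)."""
    Y = np.einsum("fkj,ftj->ftk", W, X)
    r = np.sqrt(np.sum(np.abs(Y) ** 2, axis=0))        # (T, K) norms over frequency
    return np.sum(np.mean(r, axis=0)) - np.sum(np.log(np.abs(np.linalg.det(W))))

def auxiva(X, n_iter=20, eps=1e-10):
    """X: complex STFT of the mixture, shape (F, T, K). Returns (W, Y)."""
    F, T, K = X.shape
    W = np.tile(np.eye(K, dtype=complex), (F, 1, 1))   # rows of W[f] are w_k^H
    eye = np.eye(K)
    for _ in range(n_iter):
        for k in range(K):
            # Auxiliary variable: norm of source k over all frequency bins.
            yk = np.einsum("fj,ftj->ft", W[:, k, :], X)
            r = np.sqrt(np.sum(np.abs(yk) ** 2, axis=0))
            weight = 1.0 / np.maximum(r, eps)          # G'(r) / r for G(r) = r
            for f in range(F):
                # Weighted covariance V = mean_t weight(t) x(t) x(t)^H.
                V = (X[f].T * weight) @ X[f].conj() / T
                # Closed-form update: w = (W V)^{-1} e_k, then normalize.
                w = np.linalg.solve(W[f] @ V, eye[:, k])
                w = w / np.sqrt(np.real(w.conj() @ V @ w))
                W[f, k, :] = w.conj()
    Y = np.einsum("fkj,ftj->ftk", W, X)
    return W, Y

# Toy data (hypothetical): 2 sources, 4 frequency bins, random mixing per bin.
rng = np.random.default_rng(0)
F, T, K = 4, 400, 2
activity = rng.exponential(size=(T, K))                # shared across bins
S = activity[None] * (rng.standard_normal((F, T, K))
                      + 1j * rng.standard_normal((F, T, K)))
A = rng.standard_normal((F, K, K)) + 1j * rng.standard_normal((F, K, K))
X = np.einsum("fkj,ftj->ftk", A, S)

cost_before = iva_cost(np.tile(np.eye(K, dtype=complex), (F, 1, 1)), X)
W, Y = auxiva(X)
cost_after = iva_cost(W, X)
```

In this sketch, `cost_after` should be lower than `cost_before`, since every update minimizes an upper bound of the objective that touches it at the current estimate.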

Related Links

SPIE.DSS 2015 Unsupervised Learning ICA Pioneer Award (Apr. 23, 2015)

Press Release: "New acoustic signal processing technology recognizes multiple speech simultaneously" (in Japanese, Sep. 29, 2016)

Award of supervised student (Daichi Kitamura): JSPS Ikushi Award (Jan. 31, 2017)

ICASSP 2018 Tutorial Talk (Apr. 16, 2018)

Asynchronous Distributed Microphone Array

In conventional array signal processing, a tiny time difference of arrival (e.g., 0.1 ms) between the signals observed by multiple microphones is an important cue for source localization and separation. Therefore, all channels must be synchronized. In daily life, however, we use various kinds of devices with a sound recording function (PCs, smartphones, voice recorders, video cameras, etc.). To make such asynchronous devices usable for array signal processing, we have developed new signal processing techniques. A typical scenario is as follows: participants in a meeting record it with their own smartphones and upload the recordings to a cloud server after the meeting. The recordings are then automatically synchronized, source separation and speech recognition are applied, and finally the minutes are sent to all participants by e-mail.
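To give a feel for the synchronization step, here is a minimal sketch that estimates a constant start-time offset between two recordings from the peak of their cross-correlation. This is a simplified stand-in, not the method of the publications listed in this section: it assumes both devices run at exactly the same sampling rate, so it does not handle sampling frequency mismatch. The function name `estimate_offset` and the synthetic signals are hypothetical.

```python
import numpy as np

def estimate_offset(ref, other, max_lag):
    """Return the delay of `other` relative to `ref`, in samples (may be negative)."""
    n = len(ref) + len(other) - 1
    nfft = 1 << (n - 1).bit_length()          # zero-pad to avoid circular wrap-around
    R = np.fft.rfft(ref, nfft)
    O = np.fft.rfft(other, nfft)
    # cc[l] = sum_n other[n + l] * ref[n]; negative lags are stored at the end.
    cc = np.fft.irfft(O * np.conj(R), nfft)
    lags = np.arange(-max_lag, max_lag + 1)
    return int(lags[np.argmax(cc[lags % nfft])])

# Synthetic check: the second "device" starts 37 samples late and adds its own noise.
rng = np.random.default_rng(0)
ref = rng.standard_normal(8000)
true_delay = 37
other = np.concatenate([np.zeros(true_delay), ref])[:8000]
other = other + 0.1 * rng.standard_normal(8000)

est = estimate_offset(ref, other, max_lag=200)
```

A constant offset like this only corrects the different recording start times. When the devices' clocks differ slightly, the offset drifts over a long recording, which is the problem the sampling mismatch compensation papers listed here address.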

(Demonstration)

Related Publications

- Keisuke Imoto and Nobutaka Ono, "Spatial Cepstrum as a Spatial Feature Using Distributed Microphone Array for Acoustic Scene Analysis," IEEE/ACM Trans. Audio, Speech and Language Processing, vol. 25, no. 6, pp. 1335-1343, June, 2017.

- Trung-Kien Le and Nobutaka Ono, "Closed-Form and Near Closed-Form Solutions for TDOA-based Joint Source and Sensor Localization," IEEE Trans. Signal Processing, vol. 65, no. 5, pp. 1207-1221, Mar. 2017.

- Trung-Kien Le and Nobutaka Ono, "Closed-form and Near closed-form Solutions for TOA-based Joint Source and Sensor Localization," IEEE Trans. Signal Processing, vol. 64, no. 18, pp. 4751-4766, Sept. 2016.

- Keiko Ochi, Nobutaka Ono, Shigeki Miyabe and Shoji Makino, "Multi-talker Speech Recognition Based on Blind Source Separation with Ad hoc Microphone Array Using Smartphones and Cloud Storage," Proc. Interspeech, pp. 3369-3373, Sept. 2016.

- Trung-Kien Le, Nobutaka Ono, Thibault Nowakowski, Laurent Daudet and Julien De Rosny, "Experimental Validation of TOA-based Methods for Microphones Array," Proc. ICASSP, pp. 3216-3220, Mar. 2016.

- Trung-Kien Le and Nobutaka Ono, "Reference-Distance Estimation Approach for TDOA-based Source and Sensor Localization," Proc. ICASSP, pp. 2549-2553, Apr. 2015.

- Shigeki Miyabe, Nobutaka Ono and Shoji Makino, "Blind Compensation of Interchannel Sampling Frequency Mismatch for Ad hoc Microphone Array Based on Maximum Likelihood Estimation," Elsevier Signal Processing, vol. 107, pp. 185-196, Feb. 2015. (available online)

- Hironobu Chiba, Nobutaka Ono, Shigeki Miyabe, Yu Takahashi, Takeshi Yamada and Shoji Makino, "Amplitude-based speech enhancement with nonnegative matrix factorization for asynchronous distributed recording," Proc. IWAENC, pp. 204-208, Sept. 2014.

- Shigeki Miyabe, Nobutaka Ono and Shoji Makino, "Optimizing Frame Analysis with Non-Integer Shift for Sampling Mismatch Compensation of Long Recording," Proc. WASPAA, Oct. 2013.

- Shigeki Miyabe, Nobutaka Ono and Shoji Makino, "Blind compensation of inter-channel sampling frequency mismatch with maximum likelihood estimation in STFT domain," Proc. ICASSP, pp. 674-678, May 2013.

- Takuma Ono, Shigeki Miyabe, Nobutaka Ono and Shigeki Sagayama, "Blind Source Separation with Distributed Microphone Pairs Using Permutation Correction by Intra-pair TDOA Clustering," Proc. IWAENC, Aug., 2010.

- Nobutaka Ono, Hitoshi Kohno, Nobutaka Ito and Shigeki Sagayama, "Blind Alignment of Asynchronously Recorded Signals for Distributed Microphone Array," Proc. WASPAA, pp. 161-164, Oct. 2009.

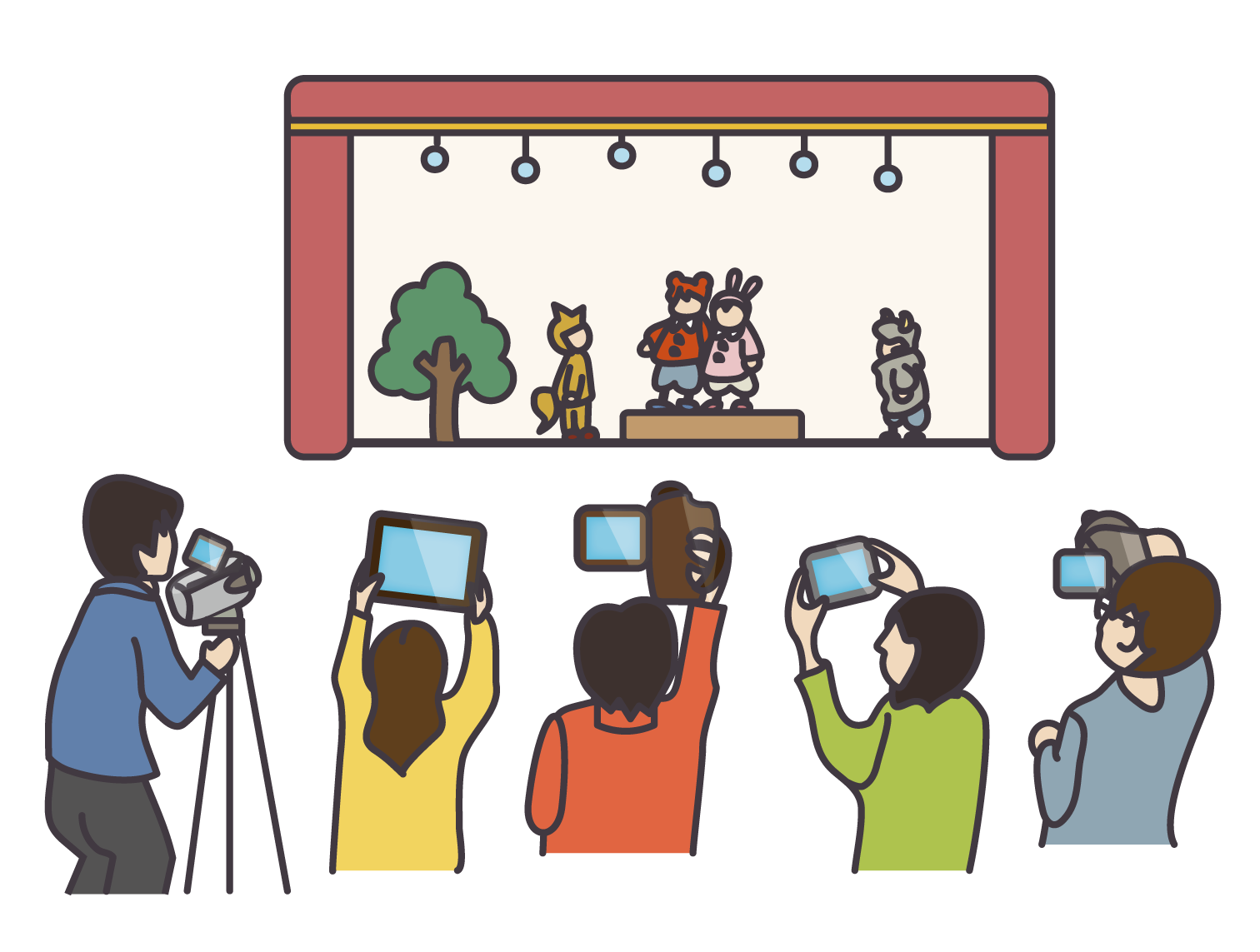

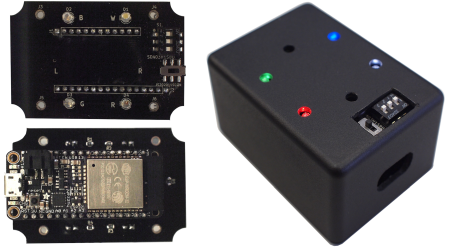

Distributed acoustic sensing using "blinky" sound-to-light conversion devices

"Seeing sound through light" is the overarching goal of our new acoustic information processing research project. Concretely, we developed the "blinky", a small, battery-powered, sound-to-light conversion device equipped with a microphone and an LED. This device can be distributed in large numbers over a vast area. Unlike conventional microphone arrays, no cables or radio network is required. Instead, the acquisition of the sound power from all devices is carried out with a video camera. We are currently investigating the use of this system for sound source localization, beamforming, and sound scene analysis. As an example, we successfully used a 101 blinkies to locate a moving sound source in a 34 x 29 m^2 gymnastic hall with reverberation time (RT60) of over 1.5 s (pictured below).

Crystal-shaped Microphone Array

Diffuse noise, which arrives from various directions in the surroundings, is difficult to suppress with conventional array signal processing techniques. We have studied noise suppression techniques for it using special, highly symmetric microphone configurations referred to as "crystal-shaped" arrays. The possible symmetric configurations are derived from group theory.
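The benefit of symmetry can be illustrated with a small numerical sketch. It assumes a four-microphone square array and a spherically isotropic noise field, whose coherence between two microphones at distance d is sin(2πfd/c)/(2πfd/c). Because the coherence depends only on distance, the coherence matrix of the square is circulant, and the fixed DFT matrix diagonalizes it at every frequency without knowing the coherence values. The square geometry, the 5 cm side length, and the names below are assumptions made for this example, not a configuration taken from the publications.

```python
import numpy as np

def square_array_coherence(freq_hz, side_m=0.05, c=343.0):
    """Diffuse-noise coherence matrix for 4 mics at the corners of a square."""
    pos = side_m * np.array([[0, 0], [1, 0], [1, 1], [0, 1]], dtype=float)
    dist = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)
    # np.sinc(x) = sin(pi x) / (pi x), so this equals sin(2 pi f d / c) / (2 pi f d / c).
    return np.sinc(2.0 * freq_hz * dist / c)

# Fixed unitary DFT matrix; it does not depend on frequency or on the noise.
M = 4
idx = np.arange(M)
U = np.exp(-2j * np.pi * np.outer(idx, idx) / M) / np.sqrt(M)

max_offdiag = 0.0
for freq in (250.0, 1000.0, 4000.0):
    G = square_array_coherence(freq)
    D = U.conj().T @ G @ U                    # transformed coherence matrix
    off = D - np.diag(np.diag(D))
    max_offdiag = max(max_offdiag, float(np.max(np.abs(off))))
```

After the transform, the diffuse noise components are mutually uncorrelated at all tested frequencies (`max_offdiag` is at the level of rounding error), so any remaining off-diagonal energy in measured data can be attributed to directional sources.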

Related Publications

- Nobutaka Ito, Hikaru Shimizu, Nobutaka Ono, and Shigeki Sagayama, "Diffuse Noise Suppression Using Crystal-shaped Microphone Arrays," IEEE Trans. on Audio, Speech and Language Processing, vol. 19, no. 7, pp. 2101-2110, Sep. 2011.

- Nobutaka Ito, Emmanuel Vincent, Nobutaka Ono, Remi Gribonval, and Shigeki Sagayama, "Crystal-MUSIC: Accurate Localization of Multiple Sources in Diffuse Noise Environments Using Crystal-Shaped Microphone Arrays," Proc. LVA/ICA, pp. 81-88, Sept. 2010.

- Nobutaka Ono, Nobutaka Ito and Shigeki Sagayama, "Five Classes of Crystal Arrays for Blind Decorrelation of Diffuse Noise," Proc. SAM, pp. 151-154, Jul. 2008.